Why so simple?#

Coming out of this month’s gRPConf, it seemed worthwhile to explore gRPC in depth, particularly how IO uses and supports it.

I had made some assumptions about grpc-go and the connect-go implementation of connectrpc, and about performance benefits that I expected to get by using Linux abstract sockets, but hadn’t made any direct measurements.

To make those measurements, I wanted to use an API that was as simple as possible and that used all four gRPC streaming modes: unary, client streaming, server streaming, and bidirectional streaming. Simplicity would make it easier to implement this API with both grpc-go and connect-go and possibly in other languages besides Go.

I considered a few interesting candidates:

- The Route Guide API. This is part of the official gRPC documentation, but even the small amount of application context that it introduces seemed too much for me.

- The GAPIC Showcase API. This is a nice demo API that was created by a team that I managed at Google. We were building code generators that produced client libraries (“Generated API Clients”) and created this API to demonstrate various aspects of the client library generation. But this also seemed too much, because I really just wanted to focus on transport and the most basic clients.

- One of the components of the GAPIC Showcase API was the Echo API that I created when I started work on gRPC Swift. This had the four methods that I needed and became the Echo API in agentio/echo-go.

The Echo API#

The Protocol Buffer description is simple enough to include in full below:

    syntax = "proto3";

    package echo.v1;

    option go_package = "github.com/agentio/echo-go/genproto/echopb;echopb";

    service Echo {
      // Immediately returns an echo of a request.
      rpc Get(EchoRequest) returns (EchoResponse) {}

      // Splits a request into words and returns each word in a stream of messages.
      rpc Expand(EchoRequest) returns (stream EchoResponse) {}

      // Collects a stream of messages and returns them concatenated when the caller closes.
      rpc Collect(stream EchoRequest) returns (EchoResponse) {}

      // Streams updates by replying with messages as they are received in an input stream.
      rpc Update(stream EchoRequest) returns (stream EchoResponse) {}
    }

    message EchoRequest {
      // The text of a message to be echoed.
      string text = 1;
    }

    message EchoResponse {
      // The text of an echo response.
      string text = 1;
    }

The agentio/echo-go repository contains this proto and a command-line tool that includes servers and clients for grpc, connect, connect-grpc, and connect-grpc-web.

To run the server, use echo-go serve grpc. This invocation starts a gRPC server on the Linux abstract socket named @echo:

$ echo-go serve grpc --socket @echo

The get method just returns the message that is sent by the client.

$ echo-go call get --address unix:@echo --message hello

{"text":"Go echo get: hello"}

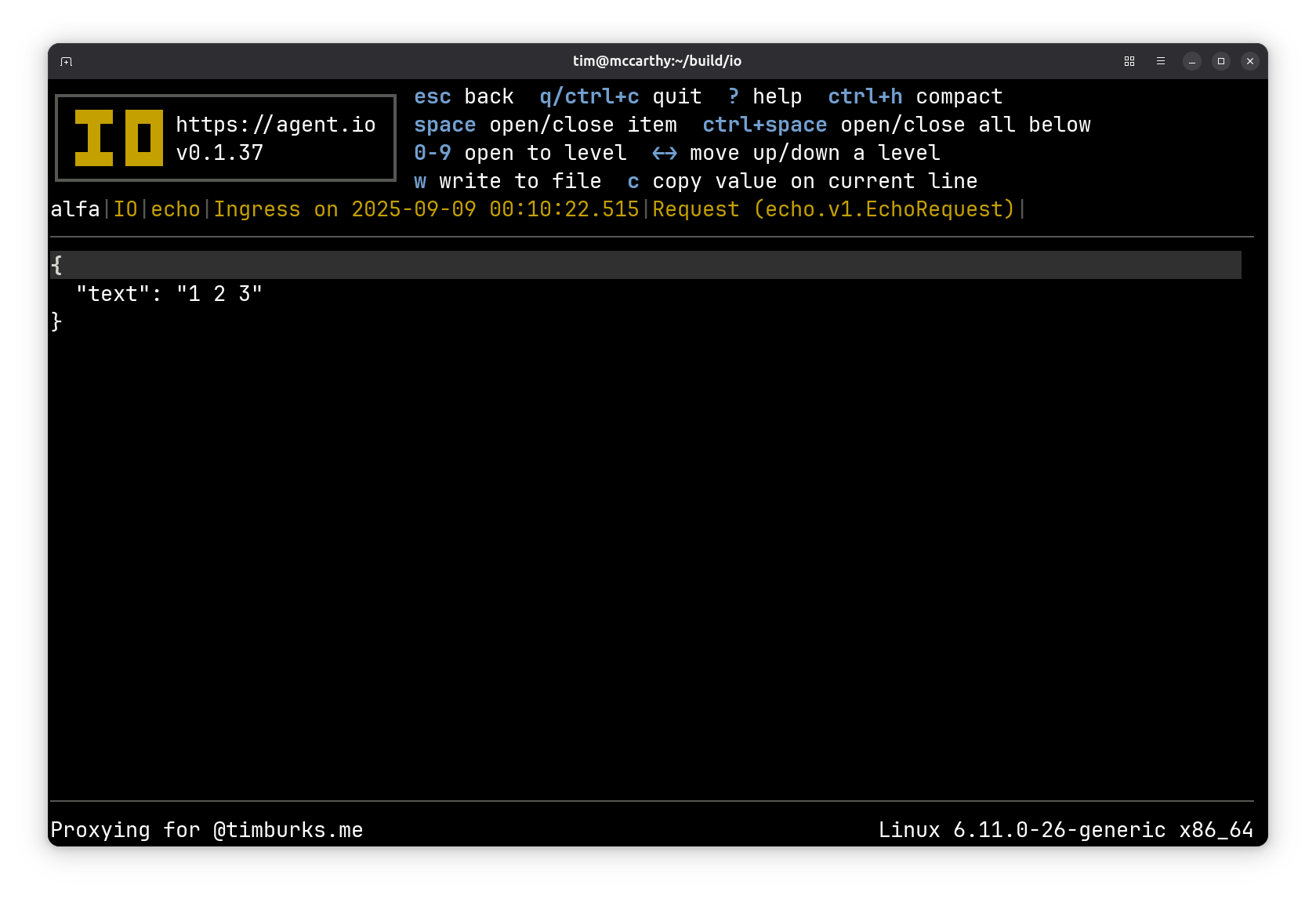

The expand method breaks the message that it receives into words and sends each word in a stream of separate messages.

$ echo-go call expand --address unix:@echo --message "1 2 3"

{"text":"Go echo expand (0): 1"}

{"text":"Go echo expand (1): 2"}

{"text":"Go echo expand (2): 3"}

The collect method gathers a stream of messages into a single response. The CLI command just sends the same message a specified number of times.

$ echo-go call collect --address unix:@echo --count 3 --message hello

{"text":"Go echo collect: hello 0 hello 1 hello 2"}

Finally, the update CLI command sends a message a specified number of times and receives the corresponding messages from the server.

$ echo-go call update --address unix:@echo --count 3 --message hello

{"text":"Go echo update (1): hello 0"}

{"text":"Go echo update (2): hello 1"}

{"text":"Go echo update (3): hello 2"}
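The four behaviors above can be modeled without any RPC library. The sketch below is an assumption-laden illustration rather than the code in echo-go: the function names and the use of Go channels to stand in for gRPC streams are mine, and the response strings are copied from the sample output shown above (including the zero-based index for expand and the one-based index for update).

```go
package main

import (
	"fmt"
	"strings"
)

// get models the unary call: one request, one response.
func get(text string) string {
	return "Go echo get: " + text
}

// expand models server streaming: one request, one response per word.
func expand(text string) []string {
	var out []string
	for i, word := range strings.Fields(text) {
		out = append(out, fmt.Sprintf("Go echo expand (%d): %s", i, word))
	}
	return out
}

// collect models client streaming: many requests, one joined response.
func collect(in <-chan string) string {
	var parts []string
	for text := range in {
		parts = append(parts, text)
	}
	return "Go echo collect: " + strings.Join(parts, " ")
}

// update models bidirectional streaming: a reply for each request as it arrives.
func update(in <-chan string, out chan<- string) {
	n := 0
	for text := range in {
		n++
		out <- fmt.Sprintf("Go echo update (%d): %s", n, text)
	}
	close(out)
}

// send plays the role of the CLI client: it emits "message 0", "message 1", ...
func send(count int, message string) <-chan string {
	ch := make(chan string)
	go func() {
		defer close(ch)
		for i := 0; i < count; i++ {
			ch <- fmt.Sprintf("%s %d", message, i)
		}
	}()
	return ch
}

func main() {
	fmt.Println(get("hello"))
	for _, r := range expand("1 2 3") {
		fmt.Println(r)
	}
	fmt.Println(collect(send(3, "hello")))

	replies := make(chan string)
	go update(send(3, "hello"), replies)
	for r := range replies {
		fmt.Println(r)
	}
}
```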

The --address and --stack options let us call each method with different technologies and libraries. Here we are using the Connect protocol to call a service running on a local port:

$ echo-go call get --address localhost:8080 --stack connect

{"text":"Go echo get: hello"}

The repo includes tests that verify all server-client combinations.

$ go test -v .

=== RUN TestSocketGrpcServiceGrpcClient

--- PASS: TestSocketGrpcServiceGrpcClient (0.10s)

=== RUN TestSocketGrpcServiceConnectGrpcClient

--- PASS: TestSocketGrpcServiceConnectGrpcClient (0.10s)

=== RUN TestSocketConnectServiceGrpcClient

--- PASS: TestSocketConnectServiceGrpcClient (0.10s)

=== RUN TestSocketConnectServiceConnectClient

--- PASS: TestSocketConnectServiceConnectClient (0.11s)

=== RUN TestSocketConnectServiceConnectGrpcClient

--- PASS: TestSocketConnectServiceConnectGrpcClient (0.11s)

=== RUN TestSocketConnectServiceConnectGrpcWebClient

--- PASS: TestSocketConnectServiceConnectGrpcWebClient (0.11s)

=== RUN TestLocalGrpcServiceGrpcClient

--- PASS: TestLocalGrpcServiceGrpcClient (0.11s)

=== RUN TestLocalGrpcServiceConnectGrpcClient

--- PASS: TestLocalGrpcServiceConnectGrpcClient (0.10s)

=== RUN TestLocalConnectServiceGrpcClient

--- PASS: TestLocalConnectServiceGrpcClient (0.11s)

=== RUN TestLocalConnectServiceConnectClient

--- PASS: TestLocalConnectServiceConnectClient (0.11s)

=== RUN TestLocalConnectServiceConnectGrpcClient

--- PASS: TestLocalConnectServiceConnectGrpcClient (0.11s)

=== RUN TestLocalConnectServiceConnectGrpcWebClient

--- PASS: TestLocalConnectServiceConnectGrpcWebClient (0.11s)

PASS

ok github.com/agentio/echo-go 1.282s

You’ll note that I tested both gRPC and connect servers running on local ports and abstract sockets with all compatible client types.

Comparing grpc-go and connect-go side-by-side#

The agentio/echo-go repo contains implementations of servers and clients using both grpc-go and connect-go. Explore them directly if you are interested. The differences are small, but enough that code can’t be trivially switched from one library to the other.

Deploying the Echo server#

The Echo server can be run locally or in a Docker image that can be built with the Dockerfile. I’ve published an image on Docker Hub at agentio/echo and am running it in one of my Nomad clusters using the following job description:

    job "echo" {
      datacenters = ["dc1"]
      type = "service"

      group "echo" {
        count = 1

        network {
          port "http" { to = 8080 }
        }

        service {
          name = "echo"
          provider = "nomad"
          port = "http"
        }

        task "echo" {
          driver = "docker"

          config {
            image = "agentio/echo:latest"
            ports = ["http"]
            force_pull = true
          }

          resources {
            cpu = 100
            memory = 50
          }
        }
      }
    }

Since I use IO as the ingress for my Nomad cluster, I make the echo server available with a simple IO ingress:

    ingress "echo.babu.dev" {
      name = "echo"
      backend = "nomad:echo"
      protocol = "http2"
    }

Visualizing traffic#

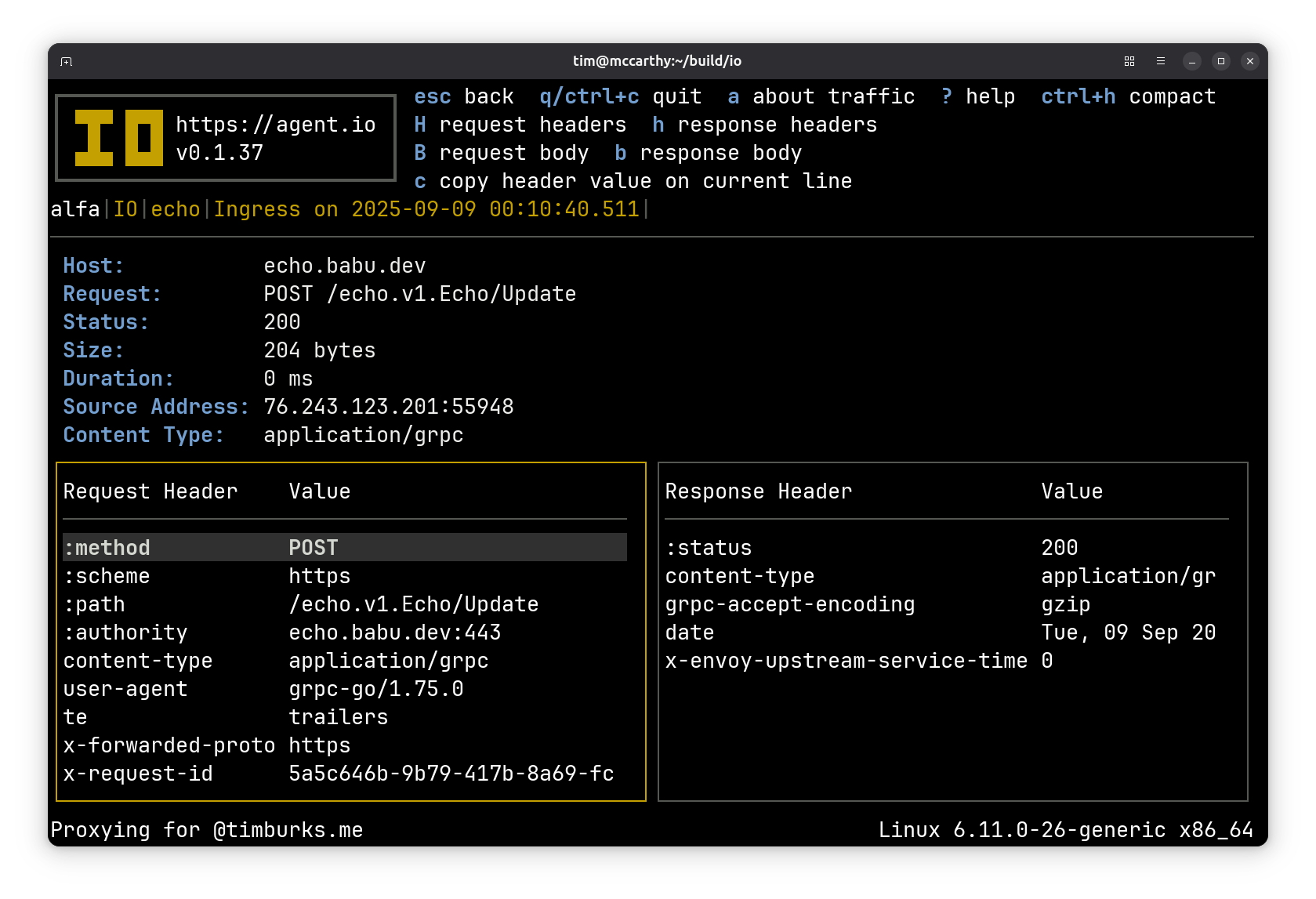

With IO as my ingress, I can observe traffic to the echo server. The Echo service turned out to be a great example to verify traffic logging and viewing for all four gRPC modes and the various forms of connectrpc.

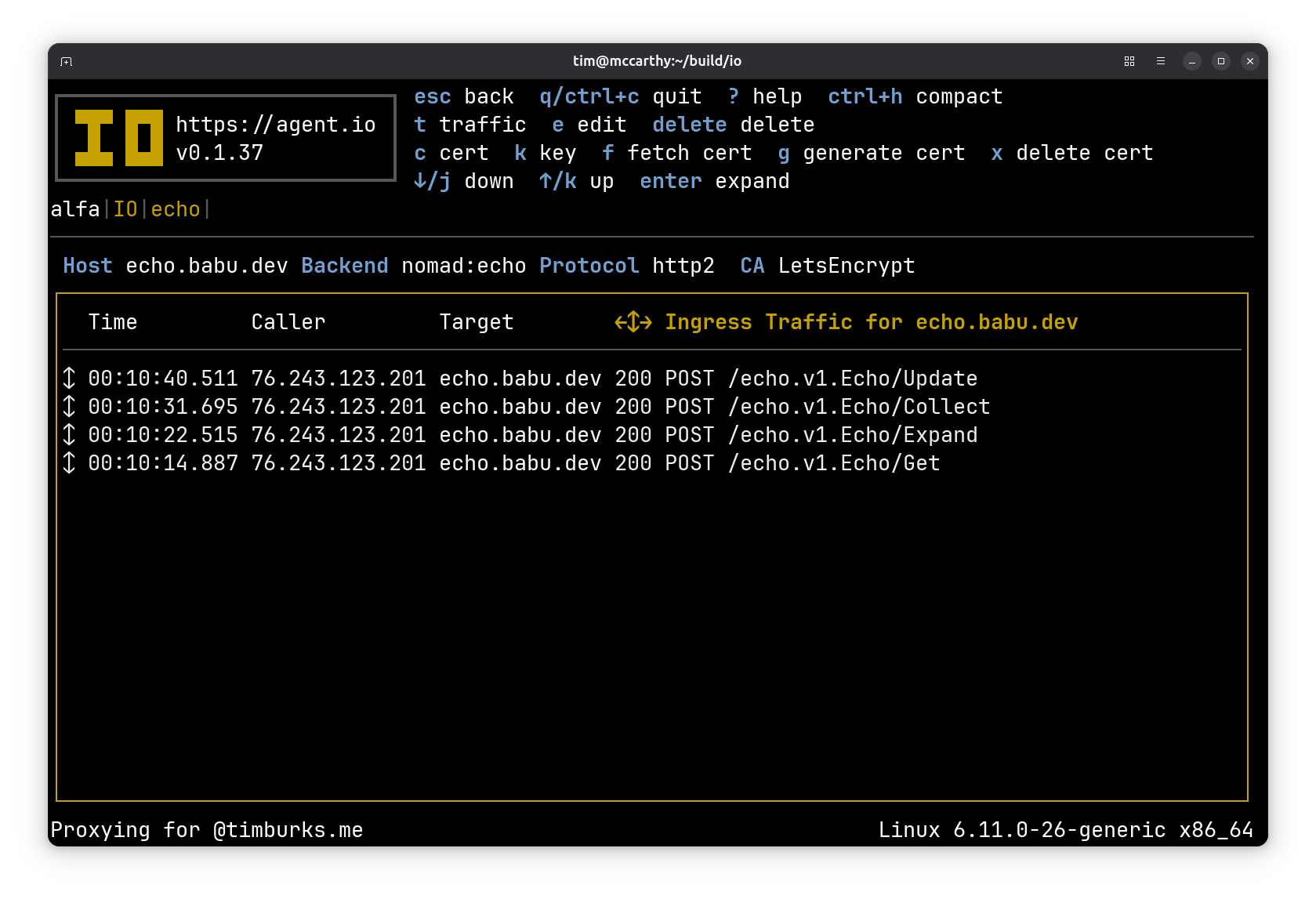

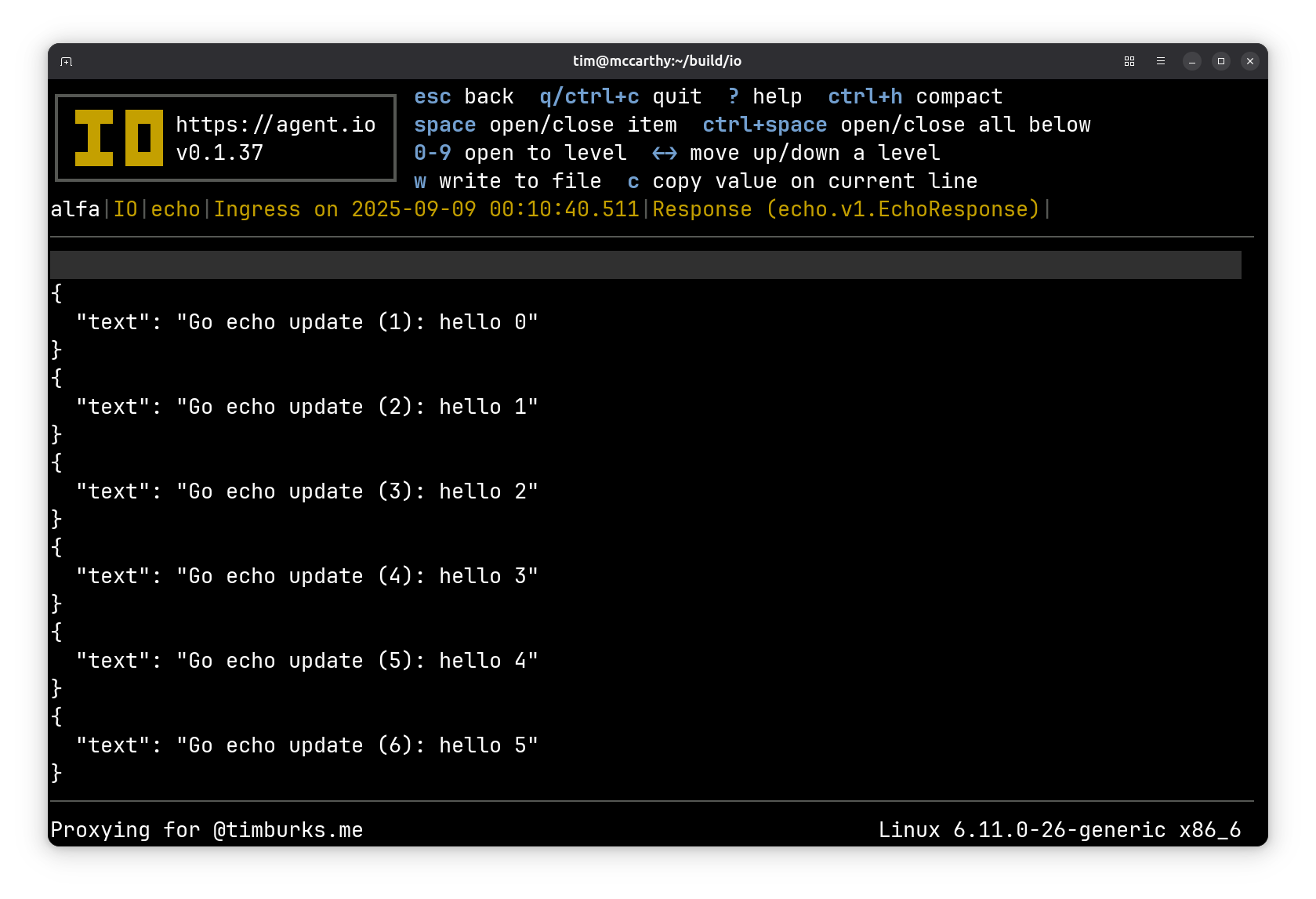

To illustrate this, here is an IO traffic log after I’ve used the echo-go client to make four requests to the Echo service. The first one is at the bottom, a call to get.

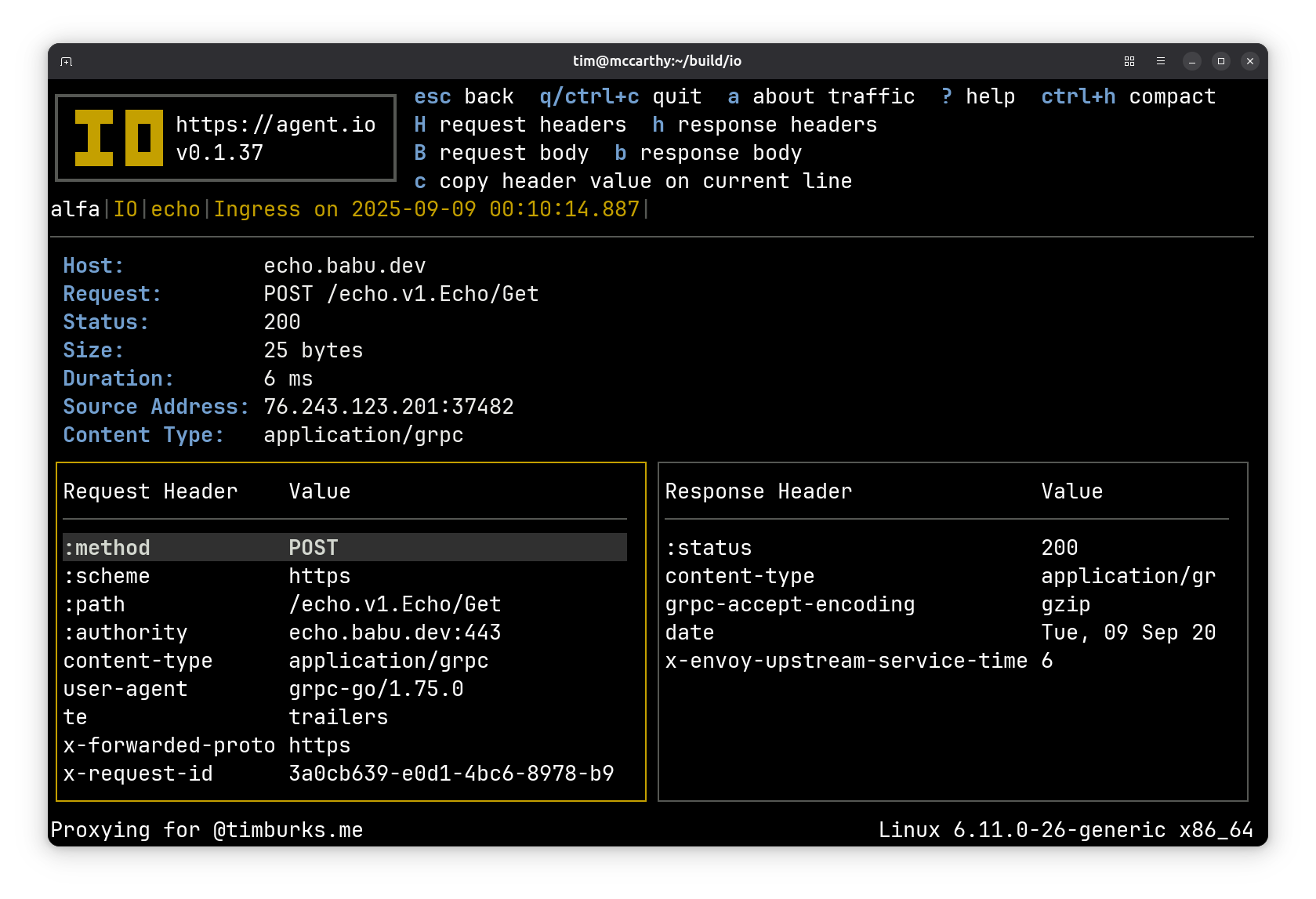

Expanding the get entry, we can see the request and response headers.

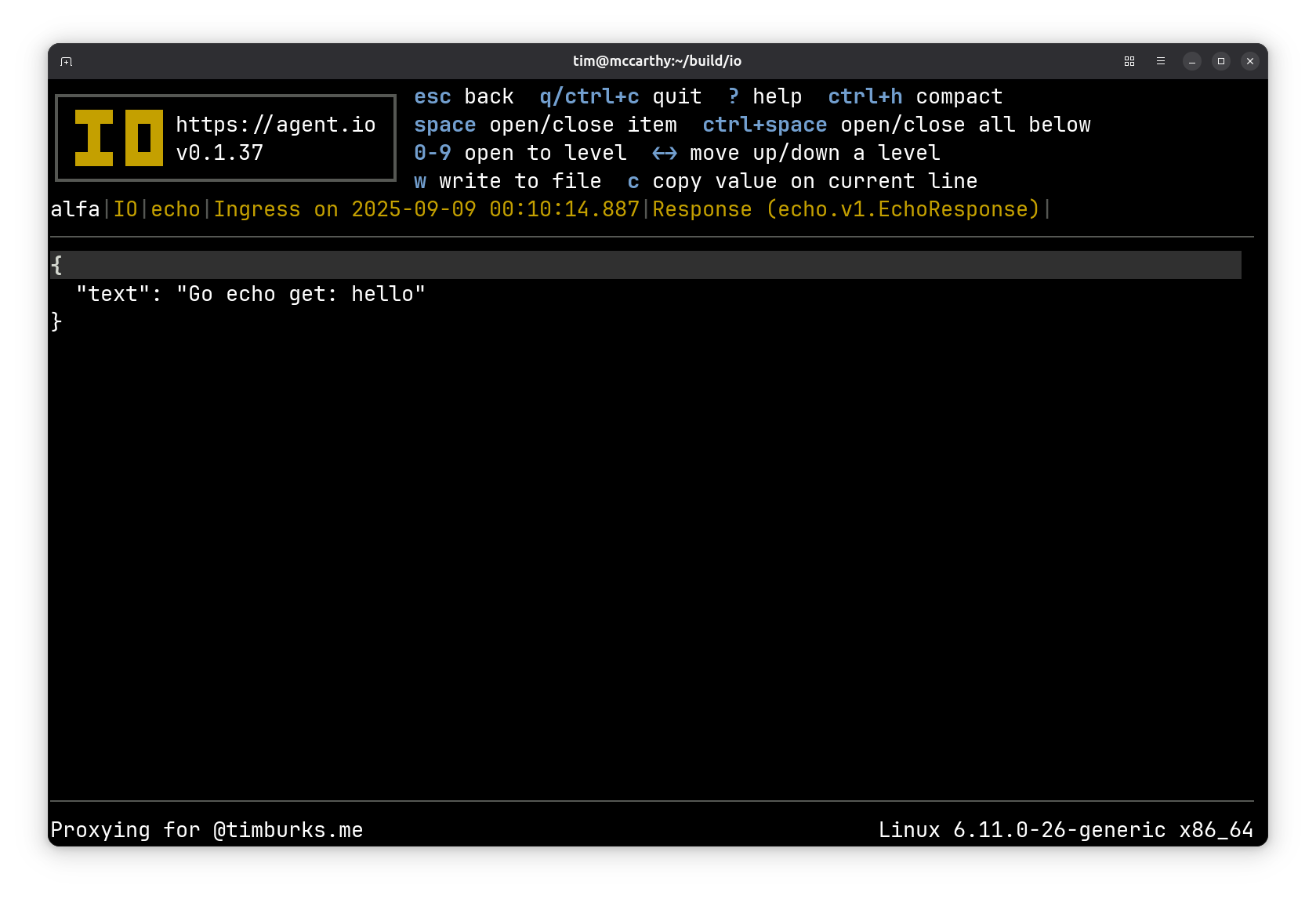

Pressing b on this screen displays the response body.

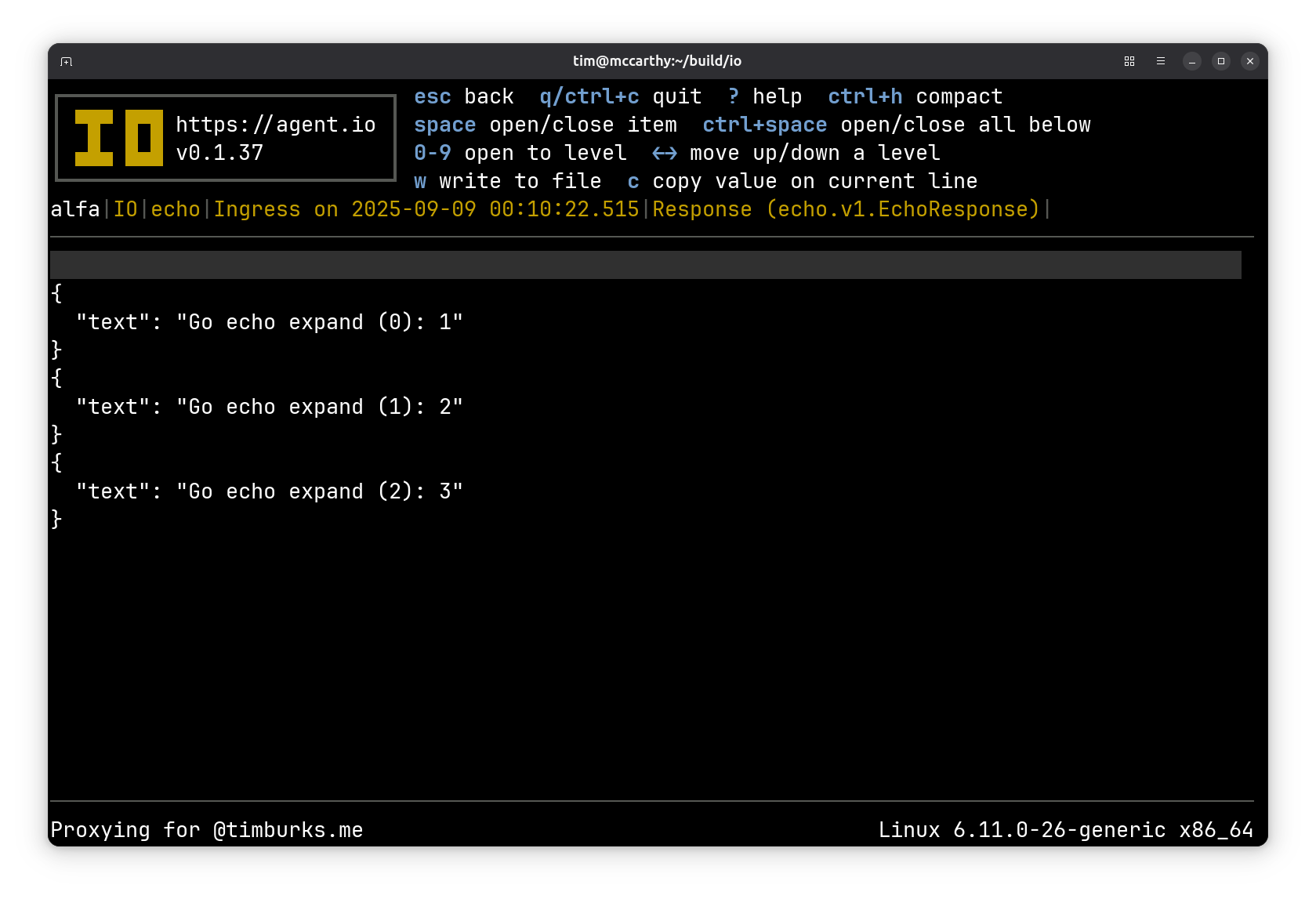

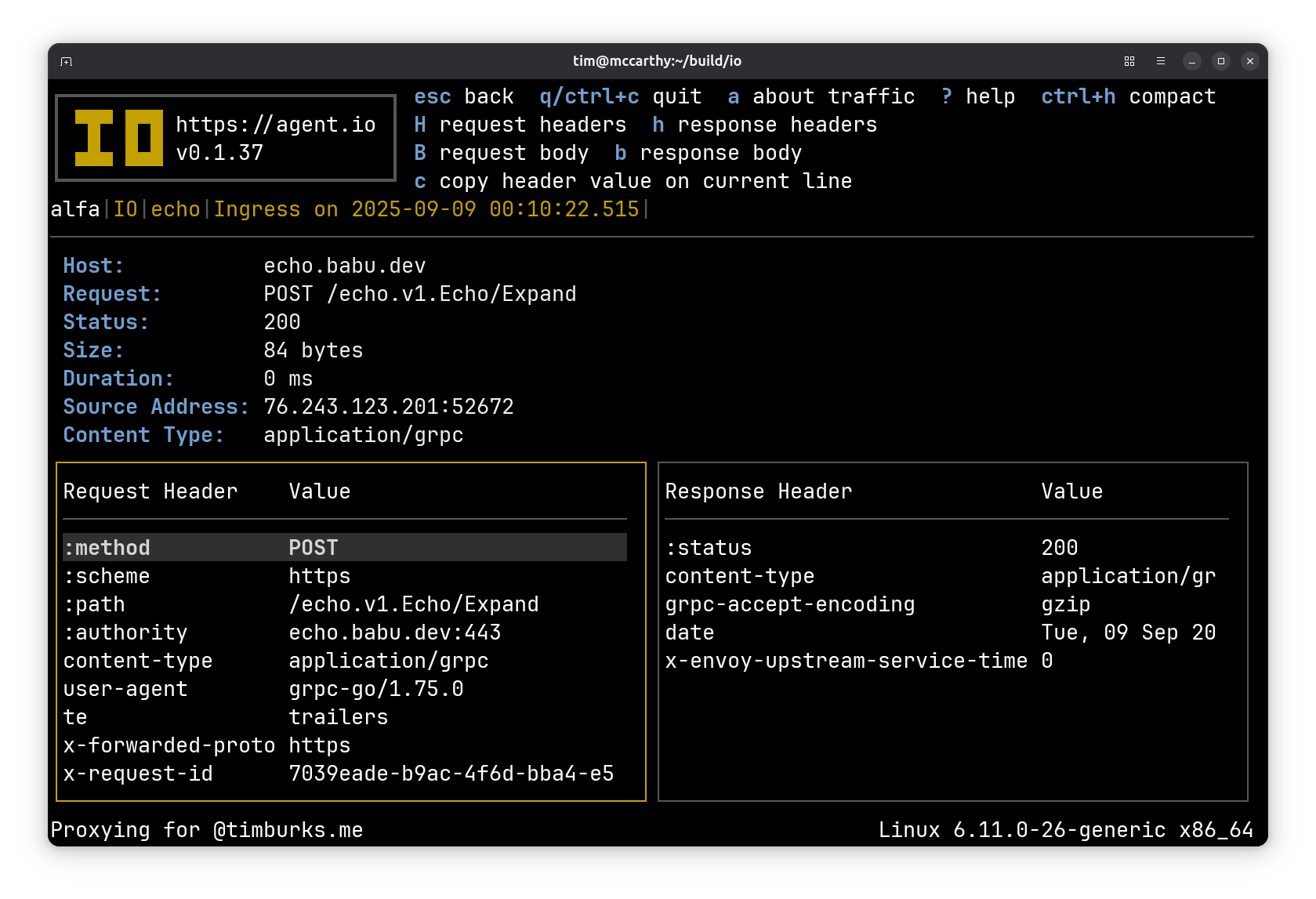

Going back up and descending into the expand entry, we see those headers and can use B to see the request body and b to see the response body:

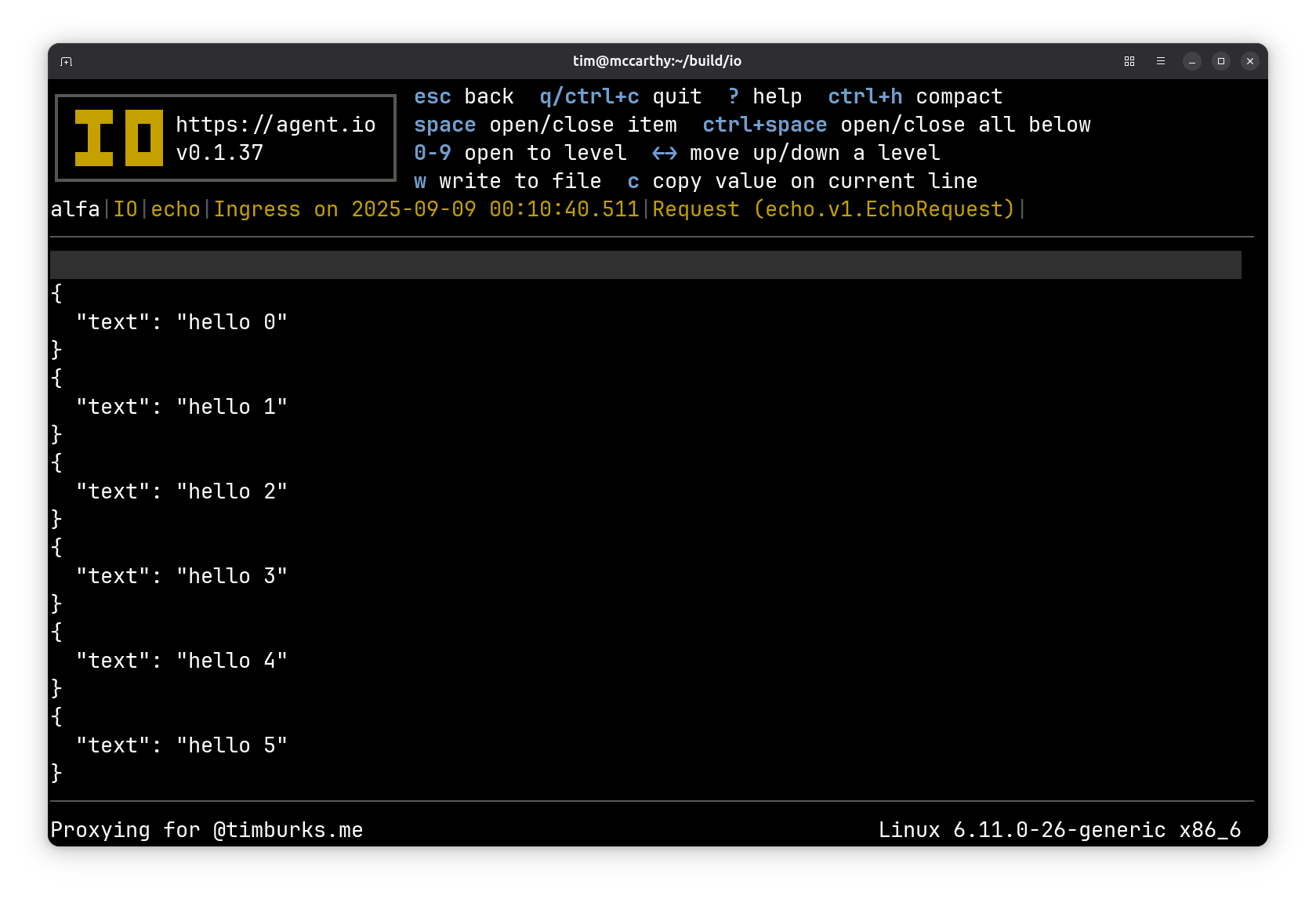

For one more example, here are the headers and request and response bodies for the update method.

You might be wondering, how did this work? IO is displaying JSON for these messages that are binary-encoded protocol buffers. The answer is that IO has an internal registry of descriptors, and we’ve copied the descriptors for the echo API into our IO. The first step was generating the descriptors with make descriptors in the repo. Then we copy the result into our running IO with SCP:

$ scp -P 8022 descriptor.pb alfa:

Now our IO running at alfa can decode binary protobuf messages for the Echo service.
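To see why descriptors are needed, it helps to look at what is actually on the wire. A protobuf message carries field numbers and wire types but no field names, so a viewer cannot print "text" without outside information. The sketch below hand-encodes and decodes a message with a single string field numbered 1, which is the shape of EchoResponse. It is an illustration under stated assumptions, not IO's implementation: `encodeText`, `decodeFirstField`, and the `fieldNames` map (a stand-in for a descriptor registry) are hypothetical names, and real code would use a protobuf library.

```go
package main

import (
	"encoding/binary"
	"errors"
	"fmt"
)

// encodeText encodes a message whose only field is a string with field number 1.
func encodeText(text string) []byte {
	buf := []byte{0x0a} // tag byte: (field number 1 << 3) | wire type 2 (length-delimited)
	buf = binary.AppendUvarint(buf, uint64(len(text)))
	return append(buf, text...)
}

// decodeFirstField reads one length-delimited field and returns its number and payload.
func decodeFirstField(b []byte) (int, string, error) {
	tag, n := binary.Uvarint(b)
	if n <= 0 {
		return 0, "", errors.New("bad tag varint")
	}
	if tag&7 != 2 {
		return 0, "", fmt.Errorf("wire type %d is not length-delimited", tag&7)
	}
	length, m := binary.Uvarint(b[n:])
	if m <= 0 {
		return 0, "", errors.New("bad length varint")
	}
	start := n + m
	if length > uint64(len(b)-start) {
		return 0, "", errors.New("truncated payload")
	}
	return int(tag >> 3), string(b[start : start+int(length)]), nil
}

func main() {
	wire := encodeText("Go echo get: hello")
	fmt.Printf("wire bytes: % x\n", wire)

	field, payload, err := decodeFirstField(wire)
	if err != nil {
		fmt.Println("error:", err)
		return
	}

	// Hypothetical stand-in for a descriptor: maps field numbers to names.
	fieldNames := map[int]string{1: "text"}
	fmt.Printf("{%q:%q}\n", fieldNames[field], payload)
}
```

Without the `fieldNames` lookup, the decoder knows only that field 1 holds some bytes. The descriptor supplies the name and the type.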

Measuring performance#

The measure directory contains scripts that I use to measure calling times. They use a special -n option on each calling command that causes the command to run the specified number of times and print the average time per call upon completion. The scripts use that to build the table below:
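The averaging described here is simple to sketch in Go. This is a guess at the general shape, not the code behind the -n option: `averageTime` is a hypothetical helper, and the sleeping function stands in for a real RPC. Dividing a `time.Duration` by the call count yields another `time.Duration`, which prints in the same style as the table entries (for example, microseconds with a fractional part).

```go
package main

import (
	"fmt"
	"time"
)

// averageTime runs call n times in sequence and returns the mean time per call.
// It stops at the first error so that failed calls do not skew the average.
func averageTime(n int, call func() error) (time.Duration, error) {
	if n <= 0 {
		return 0, fmt.Errorf("n must be positive, got %d", n)
	}
	start := time.Now()
	for i := 0; i < n; i++ {
		if err := call(); err != nil {
			return 0, err
		}
	}
	return time.Since(start) / time.Duration(n), nil
}

func main() {
	// Stand-in for an RPC; a real measurement would call the Echo service here.
	fakeCall := func() error {
		time.Sleep(50 * time.Microsecond)
		return nil
	}
	avg, err := averageTime(100, fakeCall)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("average time per call:", avg)
}
```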

| server | client | address | get | expand | collect | update |
| --- | --- | --- | --- | --- | --- | --- |
| grpc | grpc | unix:@echogrpc | 141.828µs | 148.312µs | 158.663µs | 192.409µs |
| grpc | connect-grpc | unix:@echogrpc | 176.321µs | 192.792µs | 257.729µs | 315.658µs |
| connect | grpc | unix:@echoconnect | 266.173µs | 298.701µs | 296.345µs | 430.672µs |
| connect | connect | unix:@echoconnect | 295.993µs | 594.902µs | 535.777µs | 1.082455ms |
| connect | connect-grpc | unix:@echoconnect | 342.745µs | 520.63µs | 448.378µs | 1.012712ms |
| connect | connect-grpc-web | unix:@echoconnect | 423.975µs | 615.365µs | 532.279µs | 1.118462ms |
| grpc | grpc | localhost:8080 | 178.835µs | 189.544µs | 190.661µs | 239.674µs |
| grpc | connect-grpc | localhost:8080 | 256.928µs | 272.041µs | 424.447µs | 607.799µs |
| connect | grpc | localhost:8080 | 391.694µs | 474.729µs | 430.915µs | 663.486µs |
| connect | connect | localhost:8080 | 382.058µs | 747.835µs | 672.614µs | 1.355382ms |
| connect | connect-grpc | localhost:8080 | 421.786µs | 644.886µs | 610.323µs | 1.254292ms |
| connect | connect-grpc-web | localhost:8080 | 534.861µs | 728.136µs | 683.19µs | 1.37581ms |
| grpc | grpc | alfa:8080 | 827.934µs | 783.206µs | 761.023µs | 840.768µs |
| grpc | connect-grpc | alfa:8080 | 1.206802ms | 1.139709ms | 1.731729ms | 2.168663ms |

All servers and clients were run on a DigitalOcean droplet. The final two rows show calls from one droplet to another in the same project. All others are local connections on the same machine.

As I expected, the abstract socket connections were the fastest.

Unexpectedly (although I think I was naive here), I found the connect-go implementations consistently slower than grpc-go. It was less surprising for connect and connect-grpc-web, but even the connect-grpc client was slower, and in all side-by-side comparisons, the connect server was noticeably slower than the gRPC server. This is consistent with the discussion in this GitHub issue: Is there a benchmark compare with original gprc?

If we just look at two rows, the difference is striking:

| server | client | address | get | expand | collect | update |
| --- | --- | --- | --- | --- | --- | --- |
| grpc | grpc | unix:@echogrpc | 141.828µs | 148.312µs | 158.663µs | 192.409µs |
| connect | connect-grpc | unix:@echoconnect | 342.745µs | 520.63µs | 448.378µs | 1.012712ms |

Here we are comparing a gRPC server and client with a connect server and client, and both are communicating over sockets using the gRPC protocol. This seems like a good “apples to apples” comparison, and might be a useful starting point for configuring and trimming down connect-go to rival the performance of grpc-go.
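To put numbers on "striking", the two rows can be divided column by column. The short Go program below does that arithmetic using the values from the table. The `ratio` helper is a hypothetical name, and `time.ParseDuration` is used only because it accepts the table's notation directly. The connect pair comes out at roughly 2.4 times the grpc-go time for get and about 5.3 times for update.

```go
package main

import (
	"fmt"
	"time"
)

// ratio parses two durations written as in the table and returns slow/fast.
func ratio(slow, fast string) (float64, error) {
	s, err := time.ParseDuration(slow)
	if err != nil {
		return 0, err
	}
	f, err := time.ParseDuration(fast)
	if err != nil {
		return 0, err
	}
	if f <= 0 {
		return 0, fmt.Errorf("baseline must be positive, got %v", f)
	}
	return float64(s) / float64(f), nil
}

func main() {
	methods := []string{"get", "expand", "collect", "update"}
	// Values copied from the two rows above (abstract socket, gRPC protocol).
	grpcRow := []string{"141.828µs", "148.312µs", "158.663µs", "192.409µs"}
	connectRow := []string{"342.745µs", "520.63µs", "448.378µs", "1.012712ms"}

	for i, m := range methods {
		r, err := ratio(connectRow[i], grpcRow[i])
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Printf("%-8s connect/grpc = %.2fx\n", m, r)
	}
}
```

The gap widens as the number of messages per call grows, which suggests that per-message costs dominate rather than connection setup, though these figures alone cannot confirm where the time goes.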

Removing the connect server and client allows us to zoom in on an observation that I wanted to make about IO’s architecture.

| server | client | address | get | expand | collect | update |
| --- | --- | --- | --- | --- | --- | --- |
| grpc | grpc | unix:@echogrpc | 141.828µs | 148.312µs | 158.663µs | 192.409µs |
| grpc | grpc | localhost:8080 | 178.835µs | 189.544µs | 190.661µs | 239.674µs |
| grpc | grpc | alfa:8080 | 827.934µs | 783.206µs | 761.023µs | 840.768µs |

Focusing on the update (bidirectional streaming) call only, we see that machine-to-machine connections (third row) have significantly more overhead than local socket connections (first row). This is relevant to IO’s use of the ext_proc API to control Envoy. The update call that we’ve configured makes six message exchanges, which corresponds to the heaviest ext_proc calls, and we can see that non-local controllers can have noticeably more overhead, corresponding to the network delay between systems. While ext_proc is great for a local controller that’s communicating over a socket, it might be too slow for use by remote controllers, even here, where the ping times between our test machines were only a millisecond or so.

Conclusions#

These two observations lead me to consider reversing my prior decision to use connect in the implementation of IO:

- grpc-go clients and servers are faster

- grpc-go clients can easily be configured to connect to abstract sockets (it is possible with connect-go but requires some extra client-side work)

However, there is more to explore:

- Connect is using the HTTP/2 stack in the Go standard library, while grpc-go uses a custom HTTP/2 transport. Here simplicity might outweigh speed.

- My comparisons were made for sequential accesses, and under heavy loads, IO’s Envoy can make many concurrent calls to IO’s ext_proc server, so concurrent request handling also warrants some comparison.

- In other tests, I’ve observed Connect servers using compression by default, which isn’t appropriate for IO’s local socket-based control of Envoy. Turning off compression reduced my connect times by around 25% (not shown above).