IO is growing into a great way to manage and use gRPC APIs, but gRPC has been a core ingredient of IO from the very start.

IO controls Envoy with Envoy’s gRPC APIs.

At its heart, IO is an Envoy controller, and IO controls Envoy using a set of gRPC APIs. Some of Envoy’s APIs are available in a simpler form that uses REST and JSON, but the best ones for use by a controller are the streaming APIs that are only available using gRPC.

As gRPC-based systems go, the IO-Envoy connection is relatively simple. It operates locally, with IO and Envoy running in the same process space and often in the same container. When IO starts, it launches its Envoy and configures it to call back to IO for configuration. To support these callbacks, IO runs a gRPC server, but instead of listening on a network port, the server listens on a Linux abstract socket. That’s fast and secure. And because IO needs almost none of the advanced features of the Go gRPC library, it uses Connect instead to implement a lean, lightweight server.
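To make the abstract-socket idea concrete, here is a minimal, standard-library-only sketch, assuming Linux. Go’s `net` package treats a Unix socket name that starts with `@` as an abstract-namespace address, so nothing is created in the filesystem. The socket name `@io-example-control` and the helper functions are hypothetical, not IO’s actual names. The sketch serves plain HTTP/1.1; Envoy’s gRPC calls need HTTP/2 (typically cleartext h2c on a local socket), which this sketch deliberately leaves out.

```go
package main

import (
	"context"
	"fmt"
	"io"
	"net"
	"net/http"
)

// Hypothetical socket name; IO's real socket name is not given in the text.
// On Linux, Go maps a leading '@' to the abstract namespace (a leading NUL
// byte), so the socket never appears in the filesystem.
const socketName = "@io-example-control"

// newAbstractClient returns an HTTP client that always dials the abstract
// socket, whatever host the request URL names.
func newAbstractClient(name string) *http.Client {
	return &http.Client{Transport: &http.Transport{
		DisableKeepAlives: true,
		DialContext: func(ctx context.Context, _, _ string) (net.Conn, error) {
			var d net.Dialer
			return d.DialContext(ctx, "unix", name)
		},
	}}
}

// pingOverAbstractSocket serves a tiny HTTP handler on the abstract socket,
// calls it once through that socket, and returns the response body.
func pingOverAbstractSocket(name string) (string, error) {
	ln, err := net.Listen("unix", name)
	if err != nil {
		return "", err
	}
	mux := http.NewServeMux()
	mux.HandleFunc("/ping", func(w http.ResponseWriter, _ *http.Request) {
		io.WriteString(w, "pong")
	})
	srv := &http.Server{Handler: mux}
	go srv.Serve(ln) // returns http.ErrServerClosed once Close is called
	defer srv.Close()

	// The host in the URL is arbitrary; the transport dials the socket.
	resp, err := newAbstractClient(name).Get("http://io.local/ping")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	body, err := pingOverAbstractSocket(socketName)
	if err != nil {
		panic(err)
	}
	fmt.Println(body)
}
```

Because the address lives in the abstract namespace, there is no socket file to clean up or protect with filesystem permissions, and the name disappears when the listener closes.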

Envoy’s xDS APIs allow IO to configure Envoy.

xDS is the collective name for a set of APIs that provide configuration to Envoy. IO uses two of them: LDS, the Listener Discovery Service, and CDS, the Cluster Discovery Service.

IO implements these APIs in a local server whose only client is the Envoy it manages. Envoy connects to IO’s gRPC server with a streaming gRPC call and sends configuration requests as messages on that stream. IO replies to each request with a configuration update; when no new configuration is available, IO blocks until it has something new to send. As a result, Envoy keeps an open gRPC stream to IO, and IO can send updates to reconfigure its Envoy at any time.

Envoy’s ext_proc API allows IO to observe and modify each network transaction.

ext_proc is an API that lets a controller observe and modify API traffic flowing through Envoy. For each request, Envoy can send its controller up to six messages, allowing the controller to observe and modify the headers, bodies, and trailers of both requests and responses. For remote controllers, this can be too expensive to be practical, but because IO runs locally and communicates over an abstract socket, the connection is close, fast, and powerful.

Just as it does for xDS, IO runs a gRPC server that exposes its ext_proc service, and Envoy makes a streaming API call whenever it begins to handle a network request. IO responds to the first message, and each of the five subsequent steps can then optionally be observed and modified through messages streamed as Envoy moves the request through its lifecycle. This lets IO make all kinds of observations and modifications to API traffic.
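The six-step lifecycle can be pictured as a per-request handler that switches on the phase of each message. The sketch below is hypothetical and simplified: the phase names mirror the six variants of ext_proc’s `ProcessingRequest` (`request_headers`, `request_body`, `request_trailers`, `response_headers`, `response_body`, `response_trailers`), but `Message`, `Mutation`, and `Processor` are invented types, not the generated Envoy protos. It observes every phase and modifies only the response headers, adding a made-up `x-io-request-bytes` header.

```go
package main

import (
	"fmt"
	"strings"
)

// Phase mirrors the six ProcessingRequest variants in Envoy's ext_proc API.
type Phase int

const (
	RequestHeaders Phase = iota
	RequestBody
	RequestTrailers
	ResponseHeaders
	ResponseBody
	ResponseTrailers
)

func (p Phase) String() string {
	return [...]string{"request_headers", "request_body", "request_trailers",
		"response_headers", "response_body", "response_trailers"}[p]
}

// Message is a hypothetical, simplified ext_proc message for one phase.
type Message struct {
	Phase   Phase
	Headers map[string]string // headers or trailers, depending on phase
	Body    []byte
}

// Mutation is a hypothetical, simplified reply: headers to set, if any.
type Mutation struct {
	SetHeaders map[string]string
}

// Processor handles one request's stream; ext_proc opens a stream per request,
// so per-request state can live here.
type Processor struct {
	Seen        []Phase
	requestSize int
}

// Handle records every phase and only modifies response headers.
func (p *Processor) Handle(m Message) Mutation {
	p.Seen = append(p.Seen, m.Phase)
	switch m.Phase {
	case RequestBody:
		p.requestSize += len(m.Body)
	case ResponseHeaders:
		return Mutation{SetHeaders: map[string]string{
			"x-io-request-bytes": fmt.Sprint(p.requestSize), // hypothetical header
		}}
	}
	return Mutation{} // observe only, no change
}

func main() {
	p := &Processor{}
	var last Mutation
	for ph := RequestHeaders; ph <= ResponseTrailers; ph++ {
		m := Message{Phase: ph}
		if ph == RequestBody {
			m.Body = []byte(`{"content":"hello"}`)
		}
		if mut := p.Handle(m); mut.SetHeaders != nil {
			last = mut
		}
	}
	names := make([]string, len(p.Seen))
	for i, ph := range p.Seen {
		names[i] = ph.String()
	}
	fmt.Println(strings.Join(names, ","))
	fmt.Println(last.SetHeaders["x-io-request-bytes"])
}
```

Each round trip is a message exchange on the stream, which is why doing this for every request is cheap over a local abstract socket but can be costly for a remote controller.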

IO Serving Mode uses Google Service Infrastructure APIs.

IO has been calling Google’s Service Infrastructure APIs to configure and control serving mode operations. We originally made these calls with Google’s client libraries, but those provided incomplete support for Service Control. That led us to the lower-level generated gRPC code, which seemed harder to use than generated Connect code, so we eventually switched to making these calls directly with Connect. In the near future, we expect to drop Service Infrastructure, but we may continue to use the Google API Keys API, which we would call directly with Connect.

IO uses OpenTelemetry’s gRPC APIs.

OpenTelemetry APIs are available over either HTTP or gRPC. IO uses the gRPC service, which it calls with client code that is also generated with Connect.

IO does gRPC directly.

Note that in all cases, IO’s gRPC clients and servers are implemented with Go code that is generated within the project. Message serialization code is generated by the Go protobuf plugin, and network communication code is generated by the Connect plugin. This is in keeping with our No SDKs decision and helps us avoid unpleasant surprises in third-party code, such as diamond dependency conflicts.

In practice, we keep the protos for all dependencies in the main IO repo and use a make target to produce all generated code at build time. Generated code is not checked into the repo; no one should be doing that!

IO manages gRPC API traffic.

IO is also built to manage gRPC APIs. Using Service Infrastructure, IO configures Envoy’s gRPC-JSON transcoding by copying gRPC API descriptors from the Service Configuration into the Envoy configuration that activates transcoding. This currently depends on Service Infrastructure, but in the near future we will add ways to configure it directly.

IO records and displays gRPC API traffic.

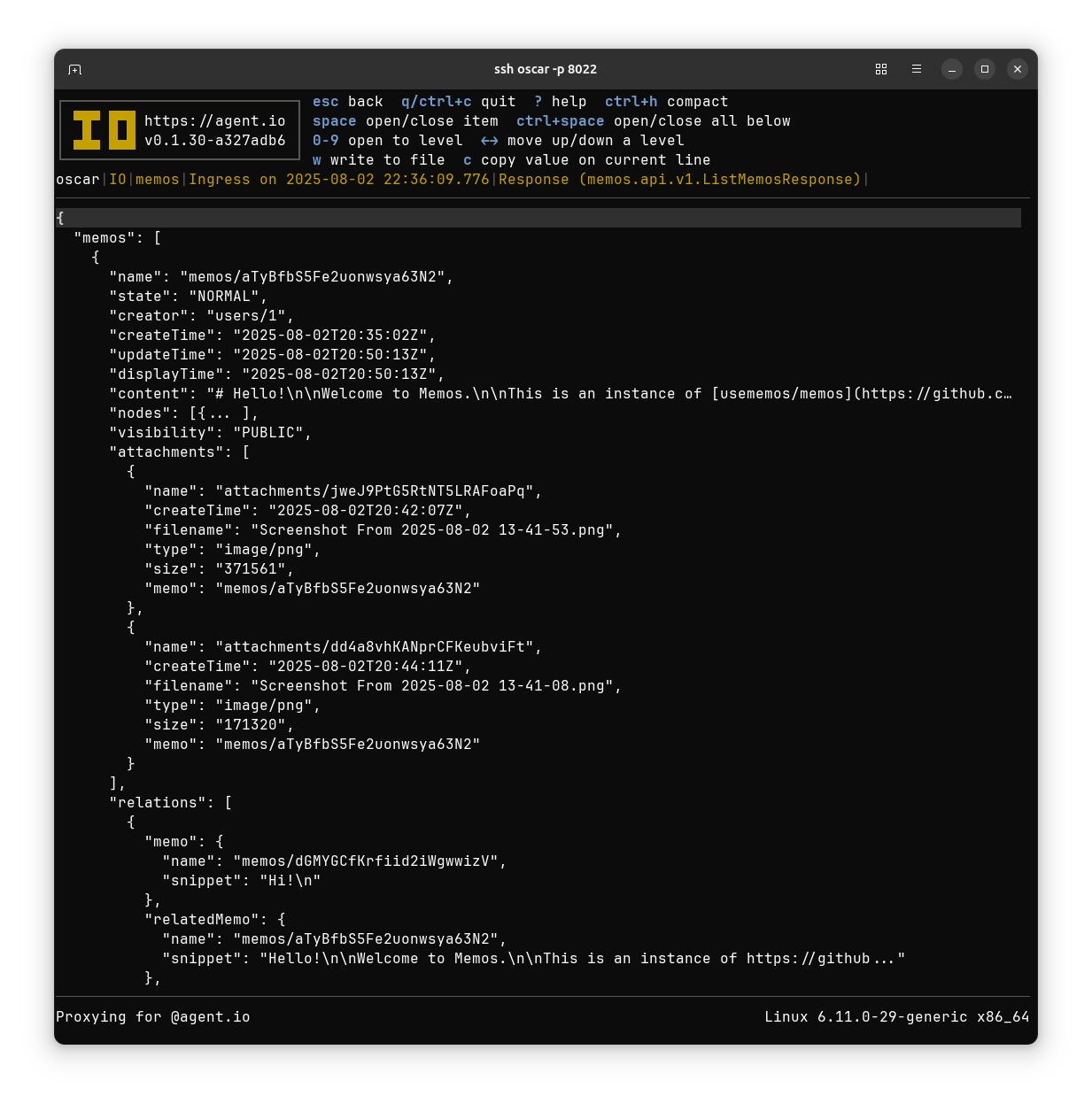

IO observes traffic and, by default, saves the headers and bodies of requests and responses in its local SQLite database. The IO terminal UI lets you view them, but when messages are binary-encoded protocol buffers, displaying them requires type information. We can get this from protobuf file descriptors: IO can load descriptors into an internal descriptor database, which it uses to convert binary-encoded protobuf messages to JSON for display. Here’s an example gRPC request body from “These are my Memos on IO”.